As AI agents—powered by large language models (LLMs)—become more sophisticated and autonomous, managing them in real-world applications demands a new operational paradigm. Traditional DevOps and MLOps frameworks are not fully equipped to handle the unique challenges posed by intelligent agents that reason, decide, and interact with complex systems. This is where AgentOps steps in.

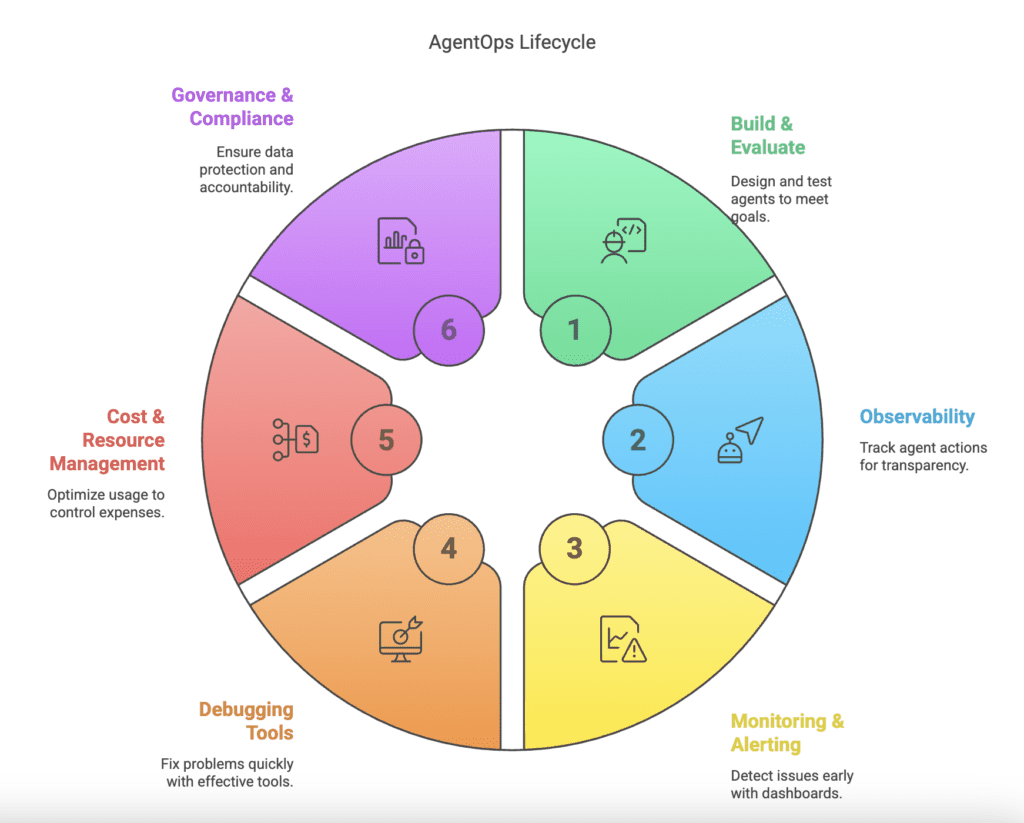

AgentOps is the emerging discipline and tooling ecosystem focused on building, observing, debugging, monitoring, and governing AI agents throughout their lifecycle. It brings the rigor of DevOps into the dynamic, unpredictable world of autonomous agents.

Why AgentOps?

AI agents, built with LLMs like GPT-4 or Claude, are designed to perform complex multi-step tasks, often involving reasoning, planning, and API interactions. They’re increasingly used in domains like customer service, RPA, data analysis, healthcare, and software engineering.

However, these agents differ significantly from traditional software systems or static ML models:

- They are non-deterministic, meaning their outputs can vary for the same inputs.

- They evolve over time as their prompts, context, and tool usage change.

- They operate autonomously, making decisions in real time based on environment feedback.

Such properties make them difficult to monitor, debug, and scale safely using conventional tools.

AgentOps addresses this gap by introducing practices and tools that:

- Provide visibility into agent behavior

- Enable root cause analysis of failures

- Help manage cost and performance

- Ensure compliance and auditability

Core Components of AgentOps

AgentOps can be broken down into six core components, each essential to the agent lifecycle: Build & Evaluate, Observability, Monitoring & Alerting, Debugging Tools, Cost & Resource Management, and Governance & Compliance.

1. Build & Evaluate

Foundation for Success:

Before any agent goes live, it must be carefully built and evaluated. This stage covers:

- Design & Prototyping: Developers design the agent’s purpose, define its decision-making logic, and create initial prototypes. Clear objectives ensure that the agent is engineered to meet specific business needs.

- Evaluation & Testing: Rigorous evaluation involves A/B testing different prompts, iterative prototyping, and simulated real-world scenarios. Performance metrics—such as response accuracy, efficiency, and error rate—are used to benchmark the agent.

- Feedback Integration: Evaluation isn’t static; it involves continuous feedback from both development teams and early users. This iterative process helps fine-tune the agent’s reasoning chains, ensuring it meets reliability and performance standards before production deployment.

A solid build and evaluation stage lays the groundwork for a stable, efficient, and robust agent, reducing the risks during later stages of monitoring and operations.
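
The evaluation step described above can be sketched as a small harness that runs two prompt variants over the same labelled cases and compares accuracy and error rate. This is a minimal sketch under stated assumptions: `variant_a` and `variant_b` are hypothetical stand-ins for real agent calls, and the test cases are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class EvalResult:
    variant: str
    accuracy: float    # share of cases answered correctly
    error_rate: float  # share of cases that raised an exception


def evaluate_variant(name, agent, cases):
    """Run one prompt variant over labelled cases and score it."""
    correct = errors = 0
    for text, expected in cases:
        try:
            if agent(text) == expected:
                correct += 1
        except Exception:
            errors += 1
    total = len(cases)
    return EvalResult(name, correct / total, errors / total)


# Hypothetical stand-ins for two prompt variants of a ticket-routing agent.
def variant_a(text):
    return "billing" if "invoice" in text else "support"


def variant_b(text):
    if not text.strip():
        raise ValueError("empty input")
    return "billing" if "invoice" in text or "refund" in text else "support"


CASES = [
    ("invoice is wrong", "billing"),
    ("refund please", "billing"),
    ("app crashes", "support"),
    ("", "support"),
]

results = [
    evaluate_variant("A", variant_a, CASES),
    evaluate_variant("B", variant_b, CASES),
]
# Prefer higher accuracy; break ties with the lower error rate.
best = max(results, key=lambda r: (r.accuracy, -r.error_rate))
```

In this toy data both variants reach the same accuracy, so the tie is broken by error rate. Which metric should dominate is a design choice that depends on the agent's business objective.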

2. Observability

Gaining Deep Visibility:

Once built and evaluated, agents need robust observability. This involves:

- Session Replays: Visual representations of each agent’s decision chain, illustrating every step from input through to final output.

- Prompt & Response Logging: Detailed logs of every interaction the agent has, allowing teams to understand not just what the agent does, but how it reaches its conclusions.

- Tool Usage Tracking: Documentation of each API call or tool invocation used by the agent, ensuring that every external interaction is logged.

- Token & Cost Metrics: Real-time tracking of token consumption and associated costs, vital for avoiding runaway expenses in LLM environments.

Observability provides the transparency required to audit agent behavior and ensure compliance with quality standards.
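
To make the four observability signals above concrete, here is a minimal in-memory session recorder. It is an illustrative sketch, not the API of any particular AgentOps product: the `Session` and `Event` classes, the model name, and the token counts are all assumptions.

```python
import json
import time
from dataclasses import asdict, dataclass, field


@dataclass
class Event:
    kind: str      # "llm_call" or "tool_call"
    name: str      # model name or tool name
    payload: dict  # prompt/response or args/result
    tokens: int = 0
    timestamp: float = field(default_factory=time.time)


@dataclass
class Session:
    session_id: str
    events: list = field(default_factory=list)

    def log_llm(self, model, prompt, response, tokens):
        """Prompt and response logging, with the token count attached."""
        payload = {"prompt": prompt, "response": response}
        self.events.append(Event("llm_call", model, payload, tokens))

    def log_tool(self, tool, args, result):
        """Tool usage tracking for each external call."""
        self.events.append(Event("tool_call", tool, {"args": args, "result": result}))

    def total_tokens(self):
        return sum(e.tokens for e in self.events)

    def replay(self):
        """Ordered, human-readable view of the decision chain."""
        return [f"{i}. {e.kind}:{e.name}" for i, e in enumerate(self.events, 1)]

    def to_json(self):
        return json.dumps(asdict(self))


session = Session("demo-1")
session.log_llm("example-model", "What is 2+2?", "I should use the calculator.", tokens=42)
session.log_tool("calculator", {"expr": "2+2"}, 4)
session.log_llm("example-model", "Tool returned 4", "The answer is 4.", tokens=30)
```

Calling `session.replay()` returns the steps in order, and `session.to_json()` produces a record that could be shipped to whatever backend a team uses.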

3. Monitoring & Alerting

Ensuring Stable Operation:

Effective monitoring involves continuous oversight of agent behavior and performance:

- Real-Time Dashboards: Visual interfaces display key performance indicators such as response times, error rates, and overall throughput.

- Anomaly Detection & Alerts: Automated systems flag unusual behavior or deviations from established baselines. For example, alerts might be triggered by sudden spikes in token usage or unexpected delays.

- Baseline Performance: Defining standard operational parameters helps in recognizing performance regressions early and implementing corrective measures promptly.

Monitoring and alerting play a critical role in maintaining high availability and reliability of AI agents in production.
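
The token-spike alert mentioned above could be approximated with a simple z-score check against a baseline. This is one possible approach rather than a prescribed method; the baseline values and the threshold of 3 standard deviations are assumptions chosen for illustration.

```python
from statistics import mean, stdev


def is_anomalous(baseline, value, threshold=3.0):
    """Flag a value that lies more than `threshold` standard deviations from the baseline mean."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any deviation counts as unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold


# Hypothetical tokens-per-session figures observed during normal operation.
baseline_tokens = [980, 1010, 1005, 995, 1020, 990]

spike = is_anomalous(baseline_tokens, 4200)   # far above the baseline
normal = is_anomalous(baseline_tokens, 1030)  # within normal variation
```

A production system would likely use a rolling window and per-agent baselines, but the principle of comparing live values against an established norm is the same.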

4. Debugging Tools

Rapid Troubleshooting:

Debugging AI agents requires specialized tools tailored to the complexity of multi-step processes:

- Tracebacks: Detailed step-by-step records of the agent’s reasoning and actions aid in pinpointing where errors occur.

- Session Comparisons: Comparing sessions that succeeded versus those that failed can provide insight into subtle issues.

- Prompt Versioning: Tracking changes in prompt configurations over time helps developers understand the impact of modifications.

- Replay & Fork Options: These allow teams to re-run parts of the agent’s workflow with alternative parameters for thorough troubleshooting.

Debugging tools are indispensable for refining agent behavior and ensuring continuous improvement in performance.
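
Two of the ideas above, session comparison and prompt versioning, are simple enough to sketch directly. The step names below are invented, and hashing the prompt text is only one assumed way to derive a version identifier.

```python
import hashlib
from itertools import zip_longest


def prompt_version(prompt: str) -> str:
    """Derive a short, stable identifier from the exact prompt text."""
    return hashlib.sha256(prompt.encode("utf-8")).hexdigest()[:12]


def first_divergence(steps_a, steps_b):
    """Return (index, step_a, step_b) where two sessions first differ, or None."""
    for i, (a, b) in enumerate(zip_longest(steps_a, steps_b)):
        if a != b:
            return i, a, b
    return None


# Hypothetical step traces from one successful and one failed session.
succeeded = ["plan", "search_docs", "summarize", "answer"]
failed = ["plan", "search_web", "summarize", "answer"]

divergence = first_divergence(succeeded, failed)

v1 = prompt_version("You are a helpful support agent.")
v2 = prompt_version("You are a helpful support agent. Be concise.")
```

Here `divergence` points at the second step, where the failed session chose a different tool. Storing `v1` and `v2` alongside each session makes it possible to tell which prompt revision a given trace was produced with.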

5. Cost & Resource Management

Optimizing Spend and Efficiency:

Controlling operational costs is essential, because hosted LLM calls are typically billed per token and a single multi-step task can trigger many calls:

- Token Usage Tracking: Detailed metrics help identify inefficient prompt strategies or redundant API calls.

- API Call Breakdown: Analysis of agent interactions can reveal areas for optimization, reducing unnecessary expenditure.

- Resource Optimization: Continuous assessment ensures that agents use computational resources efficiently, leading to predictable cost behavior in production.

Proper resource management leads to a sustainable operational model that balances performance with budget constraints.
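
As a rough illustration of the API call breakdown above, the following sketch aggregates cost per agent step from per-call token counts. The model names and prices are hypothetical placeholders, not real provider pricing.

```python
from collections import defaultdict
from decimal import Decimal

# Hypothetical prices in dollars per one million tokens.
PRICES_PER_MILLION = {
    "small-model": {"input": Decimal("0.50"), "output": Decimal("1.50")},
    "large-model": {"input": Decimal("5.00"), "output": Decimal("15.00")},
}


def call_cost(model, input_tokens, output_tokens):
    """Cost of a single LLM call in dollars."""
    price = PRICES_PER_MILLION[model]
    total = price["input"] * input_tokens + price["output"] * output_tokens
    return total / Decimal(1_000_000)


def cost_by_step(calls):
    """Sum the cost of all calls, grouped by the agent step that made them."""
    totals = defaultdict(Decimal)
    for c in calls:
        totals[c["step"]] += call_cost(c["model"], c["input_tokens"], c["output_tokens"])
    return dict(totals)


# Invented call records for one agent session.
calls = [
    {"step": "plan", "model": "large-model", "input_tokens": 2000, "output_tokens": 400},
    {"step": "retrieve", "model": "small-model", "input_tokens": 8000, "output_tokens": 200},
    {"step": "plan", "model": "large-model", "input_tokens": 2000, "output_tokens": 400},
]

totals = cost_by_step(calls)
most_expensive = max(totals, key=totals.get)
```

Using `Decimal` rather than floats avoids rounding surprises when many small amounts are summed. In this invented data the repeated planning step dominates the spend, which is the kind of redundancy a breakdown is meant to surface.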

6. Governance & Compliance

Maintaining Accountability and Security:

As AI agents interact with sensitive data and critical systems, governance is vital:

- Audit Logs: Immutable records of all decision-making processes provide accountability.

- Access Controls: Restricting who can view logs and traces, together with secure logging practices, ensures that sensitive information (such as personally identifiable information) is protected.

- Prompt Change Tracking: Version histories document changes over time, making it easier to comply with regulatory requirements.

- Compliance Monitoring: Regular audits and controls help ensure that the agent adheres to industry standards and regulatory mandates.

Governance and compliance are essential for building trust with stakeholders and maintaining operational integrity.
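
One way to approximate the audit-log and data-protection points above is a hash-chained log that redacts e-mail addresses before storing an entry. This is a simplified sketch: the chain makes tampering detectable rather than impossible, the e-mail pattern is deliberately basic, and all names and sample entries are assumptions.

```python
import hashlib
import json
import re

# Simplistic e-mail pattern, for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def redact(text):
    return EMAIL.sub("[REDACTED_EMAIL]", text)


def _digest(body):
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditLog:
    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def append(self, actor, action, detail):
        """Store a redacted entry whose hash covers the previous entry's hash."""
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "actor": actor,
            "action": action,
            "detail": redact(detail),
            "prev_hash": prev_hash,
        }
        self.entries.append({**body, "hash": _digest(body)})

    def verify(self):
        """Recompute every hash and check that the chain is unbroken."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("support-agent", "issue_refund", "Refund issued to jane.doe@example.com for order 1182")
log.append("support-agent", "close_ticket", "Ticket 77 closed")
```

After these calls `log.verify()` returns `True`. Editing any stored field afterwards changes that entry's recomputed hash, so verification fails.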

Integrations with Agent Frameworks

Modern AgentOps platforms are designed to work with popular frameworks like:

- LangChain

- CrewAI

- AutoGen (AG2)

- MetaGPT

- ReAct, Toolformer, and other agent paradigms

With minimal code additions (often 2–5 lines), developers can start instrumenting their agents to send logs and telemetry data to an AgentOps backend. This ensures that observability is baked into the development process from the beginning.
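
What "a few lines of instrumentation" can look like is sketched below with a decorator. The `track` decorator and the `TELEMETRY` list are hypothetical and do not reflect the API of any specific AgentOps SDK or agent framework. In practice the list would be replaced by a client that sends records to a backend.

```python
import functools
import time

TELEMETRY = []  # stand-in for a real telemetry backend


def track(name):
    """Record name, status, and duration for every call of the wrapped function."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            start = time.perf_counter()
            status = "ok"
            try:
                return func(*args, **kwargs)
            except Exception:
                status = "error"
                raise
            finally:
                TELEMETRY.append({
                    "name": name,
                    "status": status,
                    "seconds": time.perf_counter() - start,
                })
        return wrapper
    return decorator


# Instrumenting an existing tool function takes one added line.
@track("lookup_order")
def lookup_order(order_id):
    return {"order_id": order_id, "state": "shipped"}


result = lookup_order(1182)
```

Because the record is written in a `finally` block, failed calls are captured as well as successful ones, and the original exception still propagates to the caller.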

AgentOps vs DevOps vs MLOps

| Feature | DevOps | MLOps | AgentOps |
|---|---|---|---|
| Target | Applications | ML models | Autonomous agents |
| Observability | Logs, metrics | Model drift | Reasoning steps, tool usage |
| Monitoring Focus | Server health | Model performance | Agent behavior, token usage |
| Debugging | Stack traces | Model outputs | Session replay, tracebacks |
| Cost Tracking | Infra usage | GPU hours | LLM token & API call costs |
| Versioning | Code | Model/pipeline | Prompts, workflows |

Challenges Ahead

While AgentOps is a powerful concept, it’s still evolving. Key challenges include:

- Standardizing telemetry formats across frameworks

- Scaling observability across hundreds or thousands of concurrent agent sessions

- Maintaining privacy while storing logs that may contain sensitive information

- Debugging stochastic behavior, which may not be repeatable

Nonetheless, as more organizations deploy agents in production, the need for robust AgentOps solutions will only grow.

Conclusion

AgentOps is not just a new tool—it’s a new way of thinking about operational excellence for intelligent, autonomous systems. As LLM-powered agents continue to reshape industries, teams must adapt their practices to ensure these systems are safe, efficient, and accountable. Whether you’re building customer-facing AI assistants or backend task automators, investing in AgentOps tooling can significantly reduce operational risks and improve system reliability.

As we shift from experimentation to production-scale deployment of AI agents, AgentOps will become the backbone of responsible, scalable AI operations.