Stable Diffusion is a Generative AI model for text-to-image generation built on latent diffusion: it learns to turn samples from a simple prior distribution (Gaussian noise) into samples from the data distribution, working in a compressed latent space rather than directly on pixels. The process has four parts: forward diffusion (gradually adding noise to training data until it matches the prior), reverse diffusion (learning to remove that noise step by step), conditioning (steering the denoiser with a text prompt passed through a text encoder), and sampling (starting from noise, denoising it, and decoding the result into the final image). Together these produce high-quality, text-guided images at a lower computational cost than pixel-space diffusion, which makes the model competitive with other state-of-the-art text-to-image generation systems.

Understanding Stable Diffusion

The Stable Diffusion model applies the latent diffusion approach to Generative AI: instead of mapping a simple prior distribution to the complex data distribution directly in pixel space, it does so in the lower-dimensional latent space of an autoencoder. At its core, Stable Diffusion is designed for text-to-image generation, and running the diffusion in latent space lets it produce high-quality images with considerably less compute than pixel-space diffusion models.

Let us look at how the Stable Diffusion model works and how it generates images from text.

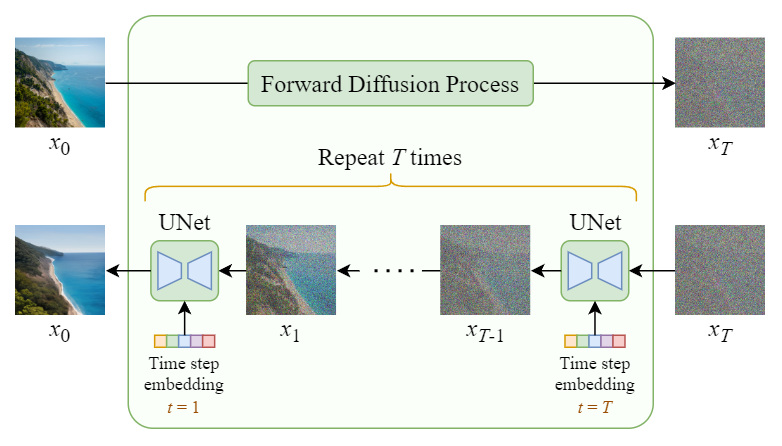

Step 1: Forward Diffusion Process

Initialization

The process starts from two ingredients. The first is a simple prior distribution, typically a standard Gaussian, which is where the forward process ends up. The second is a clean data sample, denoted x0∼q(x0). In Stable Diffusion this is the latent encoding of a training image, and it is the starting point that the forward process gradually corrupts.

Noise Addition

As the diffusion unfolds, a small amount of Gaussian noise is added to the current state xt at each of T steps, following a fixed noise schedule. This forward process has no learned parameters and does not involve the U-Net; the network is used only in the reverse process. Each step moves the state further from the data distribution and closer to the Gaussian prior, so that by the final step xT is practically indistinguishable from pure noise. Because the noise is Gaussian, xt can also be sampled directly from x0 in a single closed-form step, which is what makes training efficient.
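The noise addition can be sketched in a few lines of dependency-free Python. This is a minimal illustration, not the model's actual code: the linear schedule, its endpoints (1e-4 to 0.02), the 1000 steps, and the names `linear_beta_schedule`, `cumulative_alphas`, and `add_noise` are all assumptions chosen for the example, and a four-element list stands in for a real latent tensor.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances, linearly spaced (an assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar_t: running product of (1 - beta_s) up to and including step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Sample x_t from x_0 in one closed-form step; returns (x_t, noise)."""
    signal = math.sqrt(alpha_bars[t])        # how much of x_0 survives
    sigma = math.sqrt(1.0 - alpha_bars[t])   # how much noise is mixed in
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    xt = [signal * x + sigma * e for x, e in zip(x0, noise)]
    return xt, noise

betas = linear_beta_schedule(1000)
alpha_bars = cumulative_alphas(betas)
rng = random.Random(0)

x0 = [1.0, -0.5, 0.25, 0.0]                  # toy stand-in for a latent
x_early, _ = add_noise(x0, 10, alpha_bars, rng)    # still close to x0
x_late, _ = add_noise(x0, 999, alpha_bars, rng)    # essentially pure noise
```

At an early step the signal coefficient is close to 1 and the sample barely differs from x0; at the last step the coefficient is close to 0 and almost nothing of x0 remains.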

Step 2: Reverse Diffusion Process

Denoising

The reverse diffusion process aims to undo the noise introduced by the forward process. The forward steps cannot be inverted exactly, so a neural network (a U-Net in the original Stable Diffusion releases) is trained to predict the noise present in xt at a given timestep. Subtracting a scaled version of that prediction yields a slightly cleaner state xt−1. U-Net’s skip connections pass high-resolution features from the downsampling path directly to the upsampling path, so fine spatial detail is preserved while the network also reasons over coarse, global structure. Repeating this step many times gradually turns noise into a clean result.
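A single denoising step can be sketched as follows. The update rule is the standard DDPM ancestral step; the linear schedule and the names `make_schedule` and `reverse_step` are assumptions for illustration. The trained network is replaced here by an "oracle" that already knows the true noise, purely so the example can be checked by hand.

```python
import math
import random

def make_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Return (betas, alpha_bars) for an assumed linear noise schedule."""
    betas, alpha_bars, running = [], [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        betas.append(beta)
        alpha_bars.append(running)
    return betas, alpha_bars

def reverse_step(xt, t, predicted_noise, betas, alpha_bars, rng):
    """One DDPM step from x_t to x_{t-1}, given a noise estimate for x_t."""
    beta_t = betas[t]
    scale = 1.0 / math.sqrt(1.0 - beta_t)
    noise_coeff = beta_t / math.sqrt(1.0 - alpha_bars[t])
    mean = [scale * (x - noise_coeff * e) for x, e in zip(xt, predicted_noise)]
    if t == 0:
        return mean                      # the final step adds no fresh noise
    sigma = math.sqrt(beta_t)
    return [m + sigma * rng.gauss(0.0, 1.0) for m in mean]

betas, alpha_bars = make_schedule()
rng = random.Random(0)

x0 = [1.0, -0.5, 0.25]
true_noise = [rng.gauss(0.0, 1.0) for _ in x0]

# Noise x0 by one step, then undo it with a perfect (oracle) noise estimate.
signal, sigma = math.sqrt(alpha_bars[0]), math.sqrt(1.0 - alpha_bars[0])
x1 = [signal * x + sigma * e for x, e in zip(x0, true_noise)]
recovered = reverse_step(x1, 0, true_noise, betas, alpha_bars, rng)
```

With a perfect noise estimate the step recovers x0 up to floating-point error. A real network only approximates the noise, which is why many small steps are used instead of one large one.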

Step 3: Conditioning

Guiding the Generation

Central to the image generation process is conditioning, wherein the model is guided by a textual prompt. A text encoder, such as the frozen CLIP ViT-L/14 model used in Stable Diffusion v1, converts the textual input into a sequence of token embeddings. These embeddings are fed into the denoising network through cross-attention layers, so every denoising step is informed by the prompt and the generated image is aligned with it.
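One common way a text embedding informs a denoiser is cross-attention, where image features act as queries and text tokens supply keys and values. The sketch below is a toy, single-head version in plain Python lists. The tiny dimensions, the hand-picked projection matrices, and the helper names `matmul`, `softmax`, and `cross_attention` are assumptions for illustration; in a real model the projections are learned and the tensors are far larger.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def cross_attention(image_tokens, text_tokens, w_q, w_k, w_v):
    """Image tokens form queries; text tokens form keys and values."""
    q = matmul(image_tokens, w_q)
    k = matmul(text_tokens, w_k)
    v = matmul(text_tokens, w_v)
    scale = 1.0 / math.sqrt(len(q[0]))
    k_transposed = [list(col) for col in zip(*k)]
    scores = matmul(q, k_transposed)
    weights = [softmax([s * scale for s in row]) for row in scores]
    return matmul(weights, v), weights

# Two image tokens (dim 2) attend over three text tokens (dim 3).
image_tokens = [[1.0, 0.0], [0.0, 1.0]]
text_tokens = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
w_q = [[1.0, 0.0], [0.0, 1.0]]               # image dim -> attention dim
w_k = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]   # text dim -> attention dim
w_v = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]   # text dim -> output dim

attended, weights = cross_attention(image_tokens, text_tokens, w_q, w_k, w_v)
```

Each row of `weights` sums to 1 and says how strongly one image token draws on each text token; with these toy matrices the first image token attends most to the first text token and the second to the second.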

Step 4: Sampling Procedure

Unveiling the Masterpiece

Sampling is the reverse process run at inference time. The model draws a random state xT from the Gaussian prior and iteratively applies the text-conditioned denoising step until it reaches a clean latent x0, which the autoencoder's decoder then converts into the final image. Because the starting noise is random, different seeds yield different images for the same prompt, which allows the exploration of diverse image possibilities while keeping the output aligned with the provided text prompt.
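The sampling loop can be sketched as below. It uses a deterministic DDIM-style update, which is one of several samplers used with diffusion models and is chosen here only because it is short. The schedule, the 50 inference steps, and the names `make_alpha_bars`, `sample`, and `oracle` are assumptions. The `oracle` function is a hypothetical stand-in for the trained, text-conditioned denoiser: it pretends the data distribution is a single known latent, so the loop has a checkable answer.

```python
import math
import random

def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal coefficients for an assumed linear noise schedule."""
    alpha_bars, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def sample(predict_noise, dim, alpha_bars, num_inference_steps, rng):
    """Deterministic DDIM-style sampling from pure noise to a clean latent."""
    last = len(alpha_bars) - 1
    # Evenly spaced subset of training timesteps, noisiest first.
    timesteps = [round(last * i / (num_inference_steps - 1))
                 for i in range(num_inference_steps)][::-1]
    x = [rng.gauss(0.0, 1.0) for _ in range(dim)]      # x_T: pure noise
    for i, t in enumerate(timesteps):
        eps = predict_noise(x, t)
        a_t = alpha_bars[t]
        # Estimate the clean latent implied by the current noise prediction.
        x0_pred = [(xi - math.sqrt(1.0 - a_t) * e) / math.sqrt(a_t)
                   for xi, e in zip(x, eps)]
        if i + 1 == len(timesteps):
            x = x0_pred                                 # last step: keep the estimate
        else:
            a_prev = alpha_bars[timesteps[i + 1]]
            # Re-noise the estimate to the (lower) noise level of the next step.
            x = [math.sqrt(a_prev) * p + math.sqrt(1.0 - a_prev) * e
                 for p, e in zip(x0_pred, eps)]
    return x

alpha_bars = make_alpha_bars()
target = [0.8, -0.3, 0.1, 0.5]     # the only "data point" the oracle knows

def oracle(x, t):
    """Hypothetical perfect denoiser for a dataset containing only `target`."""
    a = alpha_bars[t]
    return [(xi - math.sqrt(a) * ti) / math.sqrt(1.0 - a)
            for xi, ti in zip(x, target)]

latent = sample(oracle, len(target), alpha_bars, 50, random.Random(0))
```

With the oracle the loop lands on `target` regardless of the seed. In a real pipeline the denoiser also receives the text embedding, and the resulting latent is passed through the autoencoder's decoder to obtain pixels.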

The Architecture

Leveraging Multimodal Diffusion Transformer (MMDiT)

Stable Diffusion 3 replaces the U-Net denoiser of earlier versions with a Multimodal Diffusion Transformer (MMDiT) architecture, which uses distinct sets of weights for image and language representations while letting the two token streams attend to each other. This architecture enhances text understanding and spelling capabilities, facilitating more nuanced and contextually relevant image generation compared to previous iterations.
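The idea of separate weights per modality with shared attention can be sketched as follows. This is a heavily simplified, single-head toy in plain Python, not the actual MMDiT block (which also includes normalization, timestep modulation, and MLP layers). The function name `joint_attention`, the dictionary layout of the weights, and all numeric values are assumptions for illustration.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Numerically stable softmax over one row of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def joint_attention(image_tokens, text_tokens, image_weights, text_weights):
    """Project each modality with its own weights, then attend over both at once."""
    # List concatenation stacks the image rows on top of the text rows.
    q = matmul(image_tokens, image_weights["q"]) + matmul(text_tokens, text_weights["q"])
    k = matmul(image_tokens, image_weights["k"]) + matmul(text_tokens, text_weights["k"])
    v = matmul(image_tokens, image_weights["v"]) + matmul(text_tokens, text_weights["v"])
    scale = 1.0 / math.sqrt(len(q[0]))
    scores = matmul(q, [list(col) for col in zip(*k)])
    weights = [softmax([s * scale for s in row]) for row in scores]
    mixed = matmul(weights, v)
    n_image = len(image_tokens)
    return mixed[:n_image], mixed[n_image:]   # split back into the two streams

identity = [[1.0, 0.0], [0.0, 1.0]]
swap = [[0.0, 1.0], [1.0, 0.0]]
image_weights = {"q": identity, "k": identity, "v": identity}
text_weights = {"q": swap, "k": swap, "v": [[2.0, 0.0], [0.0, 2.0]]}

image_tokens = [[1.0, 0.0], [0.0, 1.0]]
text_tokens = [[0.5, 0.5], [1.0, -1.0], [0.0, 2.0]]

image_out, text_out = joint_attention(image_tokens, text_tokens,
                                      image_weights, text_weights)
```

The design point is that every token, image or text, can attend to every other token, yet each modality keeps its own projection matrices.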

Training Paradigm

The Two-Stage Approach

Training the Stable Diffusion model entails a two-stage process. Initially, an autoencoder compresses image data into a lower-dimensional latent space. Subsequently, the diffusion model is trained within this latent space to generate high-quality images conditioned on textual input. This training paradigm enables the model to learn intricate correlations between text and image features, enhancing its generative capabilities.
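The second stage can be illustrated with the noise-prediction objective commonly used to train diffusion models: noise a latent to a random timestep, ask the network for the noise, and penalize the squared error. The sketch assumes the latent `z0` has already been produced by the stage-one encoder. The schedule, the function names, and the `text_embedding` placeholder are assumptions, and the two "models" are hypothetical stand-ins (one untrained, one perfect) rather than real networks.

```python
import math
import random

def make_alpha_bars(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Cumulative signal coefficients for an assumed linear noise schedule."""
    alpha_bars, running = [], 1.0
    for i in range(num_steps):
        beta = beta_start + (beta_end - beta_start) * i / (num_steps - 1)
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def noise_prediction_loss(z0, text_embedding, predict_noise, alpha_bars, rng):
    """One training sample: mean squared error between true and predicted noise."""
    t = rng.randrange(len(alpha_bars))               # random timestep
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in z0]
    zt = [math.sqrt(a) * z + math.sqrt(1.0 - a) * e for z, e in zip(z0, noise)]
    predicted = predict_noise(zt, t, text_embedding)
    return sum((p - e) ** 2 for p, e in zip(predicted, noise)) / len(z0)

alpha_bars = make_alpha_bars()
z0 = [0.8, -0.3, 0.1, 0.5]            # toy latent from the stage-one encoder
text_embedding = [0.2, 0.7]           # placeholder; unused by the stand-ins below

def untrained(zt, t, text_embedding):
    """Stand-in for a network that has learned nothing: predicts zero noise."""
    return [0.0 for _ in zt]

def perfect(zt, t, text_embedding):
    """Stand-in for an ideal network on this one latent: recovers the exact noise."""
    a = alpha_bars[t]
    return [(z - math.sqrt(a) * c) / math.sqrt(1.0 - a) for z, c in zip(zt, z0)]

# Same seed for both calls, so both see the same timestep and noise.
loss_untrained = noise_prediction_loss(z0, text_embedding, untrained,
                                       alpha_bars, random.Random(0))
loss_perfect = noise_prediction_loss(z0, text_embedding, perfect,
                                     alpha_bars, random.Random(0))
```

Training drives the loss from the untrained level toward zero by averaging this quantity over many latents, prompts, timesteps, and noise draws.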

Applications and Advantages

The versatility of the Stable Diffusion model extends across various applications, including text-to-image generation, image inpainting, class-conditional synthesis, unconditional image generation, and super-resolution tasks. Its strong performance in these domains, coupled with reduced computational requirements compared to diffusion models that operate directly on pixels, underscores its significance in advancing AI-driven image generation.
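The computational saving comes largely from how much smaller the latent is than the image. The arithmetic below assumes the configuration of the Stable Diffusion v1 releases (a spatial downsampling factor of 8 and 4 latent channels) and a 512×512 RGB input; other versions and resolutions give different numbers. The helper names are made up for the example.

```python
def latent_shape(height, width, downsample_factor=8, latent_channels=4):
    """Shape (channels, height, width) of the latent for a given image size."""
    if height % downsample_factor or width % downsample_factor:
        raise ValueError("image size must be a multiple of the downsampling factor")
    return (latent_channels, height // downsample_factor, width // downsample_factor)

def num_values(shape):
    """Total number of scalar values in a tensor of the given shape."""
    total = 1
    for size in shape:
        total *= size
    return total

pixel_shape = (3, 512, 512)                 # RGB image
z_shape = latent_shape(512, 512)
ratio = num_values(pixel_shape) / num_values(z_shape)

print(z_shape, ratio)  # (4, 64, 64) 48.0
```

Under these assumptions the denoiser processes 48 times fewer values per step than a pixel-space model would at the same resolution.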

Comparison to Other Models

When compared to other text-to-image models like DALL-E 2 and Imagen (and to video-oriented systems such as Veo, which are not a like-for-like comparison), the Stable Diffusion model demonstrates competitive performance and offers distinct advantages, particularly in text-guided image generation tasks. Its latent diffusion approach, coupled with effective conditioning mechanisms, makes it a strong contender in the field of generative AI.

Accessibility and Availability

The accessibility of the Stable Diffusion model is further augmented by its permissive licensing, ensuring widespread adoption and customization. Moreover, its capability for fine-tuning with minimal data, as demonstrated through transfer learning with as few as five images, renders it adaptable to diverse use cases and applications.

Final Words

In summary, the Stable Diffusion model shows how far a well-designed diffusion approach can go. By combining diffusion in a compressed latent space, effective text conditioning, and a versatile architecture, it has emerged as a powerful tool for synthesizing high-quality images guided by textual input. As generative AI continues to develop, Stable Diffusion remains an influential reference point and opens up new possibilities for the field.