Liquid Foundation Models (LFMs) are a family of AI models that depart from traditional transformer-based architectures in favor of continuous-time dynamics and adaptive computation. Developed by Liquid AI and introduced in late 2024, LFMs are designed as a unified, modality-agnostic foundation capable of handling text, audio, vision, time series, and more within a single framework. By building on liquid neural network constructs, specifically liquid time-constant (LTC) modules and state-space formulations, LFMs aim to reduce memory usage, speed up inference, and simplify multimodal integration. This article covers the origins, architecture, key innovations, practical benefits, and future prospects of LFMs, and explains why they mark a significant milestone in foundation model research.

Origins and Motivation

The genesis of Liquid Foundation Models traces back to early research on liquid neural networks and state‑space models, which explored how continuous‑time dynamics could be harnessed for learning sequential patterns more efficiently than discrete‑layer counterparts. Traditional transformers, while powerful, face inherent limitations: self‑attention mechanisms scale quadratically with sequence length, fixed-weight layers lack dynamic adaptability, and discrete architectures often demand separate embedding pipelines for different data modalities. Recognizing these constraints, researchers sought an alternative that could:

- Adapt Computational Effort on the Fly – Allocate more or less processing resources depending on input complexity.

- Reduce Memory Footprint – Avoid the heavy O(N²) matrices characteristic of self‑attention.

- Unify Modalities – Treat text, vision, audio, and time series within the same representational and computational substrate.

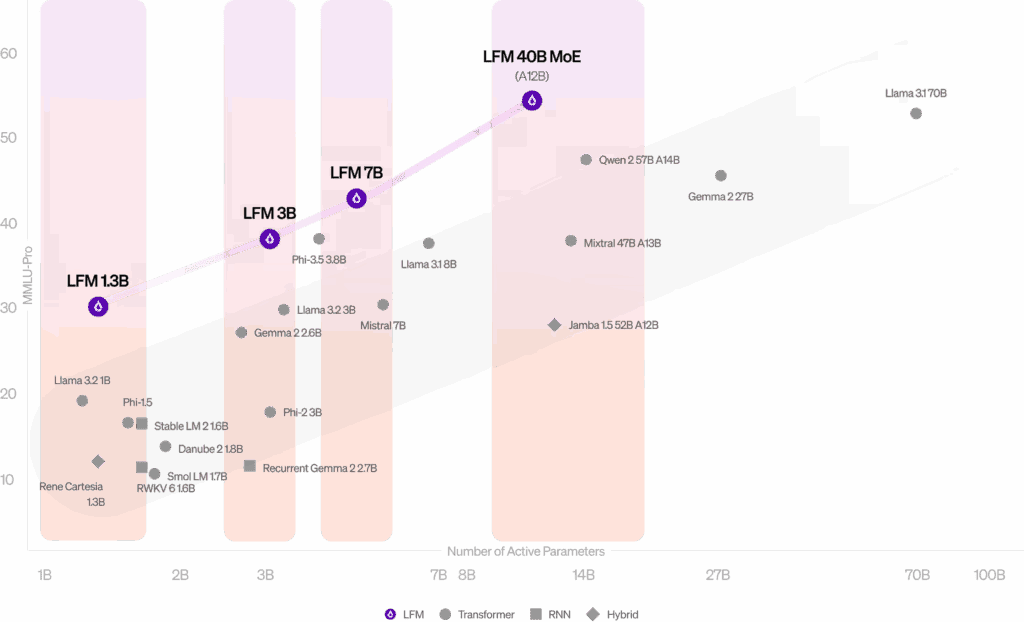

Liquid AI translated these theoretical insights into a scalable foundation model series, publicly releasing three LFM variants with 1 billion, 3 billion, and 40 billion parameters, each optimized for different deployment scenarios, from edge devices to large-scale cloud environments.

Core Architectural Principles

At the heart of Liquid Foundation Models (LFMs) lie a few fundamental innovations:

- Liquid Time‑Constant (LTC) Modules

Unlike discrete transformer blocks, LTC modules model state transitions as differential equations whose time‑constants adapt dynamically to incoming data. Each module computes continuous updates to its hidden state, allowing the network to “flow” information in a smooth, time‑aware manner rather than in abrupt, layer‑by‑layer steps.

- State‑Space Formulation

LFMs treat sequence modeling as learning the parameters of a continuous‑time state‑space system. Inputs are fed into differential equations that evolve the system’s hidden state, and outputs are read out at any time point. This representation naturally supports long‑range dependencies without incurring quadratic memory costs.

- Adaptive Computation Time

Through mechanisms akin to learned gating, LFMs determine how many internal update steps to perform for each input element. Simple or redundant inputs may prompt fewer updates, while complex tokens trigger more intensive computation, which reduces inference time and energy usage.

- Unified Feature Space

Instead of crafting separate embedding and encoder networks for text, vision, and audio, LFMs map all modalities into a common continuous feature space. This enables true cross‑modal reasoning, such as conditioning text generation on visual context or synthesizing audio based on time‑series signals, all within the same model instance.
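The LTC and adaptive-computation ideas above can be made concrete with a small sketch. It assumes the neuron equation and fused semi-implicit Euler solver from the original liquid time-constant network research; it is not LFM source code. The halting rule in the hypothetical `run_adaptive` function (stop once the state changes by less than `tol`) is an illustrative stand-in for learned gating, not a mechanism documented for LFMs. All function and parameter names are assumptions chosen for this example.

```python
import math


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def ltc_step(x, inp, tau, w_in, w_rec, bias, a, dt):
    """One fused semi-implicit Euler step for a small layer of LTC neurons.

    Neuron i follows dx_i/dt = -(1/tau_i + f_i) * x_i + f_i * a_i, where the gate
    f_i = sigmoid(w_in_i * inp + sum_j w_rec_ij * x_j + bias_i) depends on the
    input, so the effective time constant tau_i / (1 + tau_i * f_i) varies with it.
    """
    new_x = []
    for i in range(len(x)):
        pre = w_in[i] * inp + sum(w_rec[i][j] * x[j] for j in range(len(x))) + bias[i]
        f = sigmoid(pre)
        # Decay term treated implicitly: x stays between 0 and a_i if it starts there.
        new_x.append((x[i] + dt * f * a[i]) / (1.0 + dt * (1.0 / tau[i] + f)))
    return new_x


def run_adaptive(x, inp, params, dt=0.1, tol=1e-3, max_steps=50):
    """Hypothetical halting rule: unfold the ODE until the state stops changing.

    Returns the final state and the number of update steps spent on this input.
    """
    for step in range(1, max_steps + 1):
        nxt = ltc_step(x, inp, dt=dt, **params)
        change = max(abs(new - old) for new, old in zip(nxt, x))
        x = nxt
        if change < tol:
            return x, step
    return x, max_steps


if __name__ == "__main__":
    gate_params = {"tau": [1.0], "w_in": [0.0], "w_rec": [[0.0]], "bias": [0.0], "a": [1.0]}
    print(run_adaptive([0.0], 0.0, gate_params))    # far from equilibrium: many steps
    print(run_adaptive([1 / 3], 0.0, gate_params))  # already at equilibrium: 1 step
```

With a constant gate of 0.5, the equilibrium is 1/3, so a state that already sits there halts after a single update while a state starting at zero needs many. This mirrors the "fewer updates for simple inputs" behavior in miniature.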

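LFM Performance

Liquid AI reports that LFMs offer a new best performance-to-size trade-off in the 1B, 3B, and 12B (active parameter) categories; the 12B figure refers to the active parameters of the 40-billion-parameter mixture-of-experts variant.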

How Liquid Foundation Models (LFMs) Differ from Transformer LLMs

| Aspect | Liquid Foundation Models | Transformer LLMs |
|---|---|---|
| Computational Paradigm | Continuous‑time state‑space dynamics | Discrete layers with self‑attention |
| Attention Mechanism | Implicit, feature‑dependent mixing via LTC kernels | Explicit self‑attention matrices (O(N²)) |
| Parameter Adaptivity | Weights and time‑constants adapt per input | Fixed weights; same processing for every token |
| Memory & Compute | Linear scaling with sequence length; lower memory | Quadratic attention cost; KV cache grows linearly with context |
| Modality Integration | Single unified feature space for all modalities | Separate embedding heads and external encoders |
| Inference Efficiency | Dynamic compute allocation; lower latency | Uniform per‑layer computation; potential inefficiency |

These differences translate into tangible benefits. LFMs can process very long sequences, such as long audio or video streams and extensive sensor logs, without prohibitive memory costs. Their adaptive computation is intended to let them match or exceed the accuracy of transformer models while requiring fewer floating-point operations. Moreover, the unified modality design simplifies development pipelines: a single LFM can, in principle, replace a text-only LLM plus separate vision and audio models.
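The memory argument can be checked with back-of-the-envelope arithmetic. The sketch below assumes hypothetical model dimensions (32 layers, 32 heads, hidden size 4096, 16-bit values, and a fixed per-layer state of 65,536 values); these are not published LFM or transformer specifications. Optimized attention kernels can avoid materializing the full score matrix, in which case the linearly growing KV cache becomes the main long-context cost for a transformer.

```python
def attention_score_bytes(seq_len: int, num_heads: int, bytes_per_value: int = 2) -> int:
    """Full N x N attention-score matrices for one layer, if materialized."""
    return seq_len * seq_len * num_heads * bytes_per_value


def kv_cache_bytes(seq_len: int, num_layers: int, hidden_size: int, bytes_per_value: int = 2) -> int:
    """Cached keys and values for every past token in every layer (standard multi-head attention)."""
    return 2 * seq_len * num_layers * hidden_size * bytes_per_value


def recurrent_state_bytes(num_layers: int, state_size: int, bytes_per_value: int = 2) -> int:
    """Fixed-size hidden state per layer; independent of sequence length."""
    return num_layers * state_size * bytes_per_value


def mib(num_bytes: int) -> float:
    return num_bytes / 2**20


# Hypothetical dimensions chosen only for illustration.
LAYERS, HEADS, HIDDEN, STATE = 32, 32, 4096, 65_536

for n in (4_096, 32_768):
    print(
        f"N={n}: scores {mib(attention_score_bytes(n, HEADS)):,.0f} MiB per layer, "
        f"KV cache {mib(kv_cache_bytes(n, LAYERS, HIDDEN)):,.0f} MiB, "
        f"state {mib(recurrent_state_bytes(LAYERS, STATE)):,.0f} MiB"
    )
```

Under these assumptions, going from 4,096 to 32,768 tokens grows the materialized score matrices from 1 GiB to 64 GiB per layer and the KV cache from 2 GiB to 16 GiB, while the fixed recurrent state stays at 4 MiB.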

Practical Benefits and Applications

- Edge and On‑Device AI

Smaller LFM variants (e.g., the 1-billion-parameter model) can run on resource-constrained hardware such as smartphones, IoT sensors, or embedded devices, delivering generative and predictive capabilities without constant cloud connectivity.

- Enterprise‑Grade Deployment

Organizations deploying models on‑premises benefit from LFMs’ reduced memory footprint and adaptive inference, cutting infrastructure costs and carbon footprints while maintaining high throughput for tasks like document summarization, anomaly detection, and multi‑modal customer support.

- Streaming and Real‑Time Processing

The continuous-time nature of LFMs makes them well suited to streaming scenarios such as financial tick data, live audio transcription, or sensor-driven control systems, where the model updates its state as each new data point arrives instead of waiting to batch a window of inputs.

- Cross‑Modal Generation

LFMs are well positioned for applications that combine media types. Potential examples include generating narrated video summaries from surveillance footage, creating synchronized music from motion-capture data, or translating sign-language videos into text in real time.

- Research and Fine‑Tuning

The adaptable structure of LFMs lends itself to parameter‑efficient fine‑tuning: researchers can adjust time‑constant parameters or gating mechanisms for specialized tasks without retraining the entire network, enabling rapid experimentation and domain adaptation.
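The streaming pattern described above can be sketched with a diagonal linear state-space model, assuming zero-order-hold discretization (the input is held constant over each step). This is a generic state-space example, not the layer design used in LFMs, and the class name `StreamingDiagonalSSM` is hypothetical. The decay rates `a` act as the time-constant parameters; under the fine-tuning approach described above, one could in principle adapt only these values while freezing the rest.

```python
import math
from dataclasses import dataclass, field


@dataclass
class StreamingDiagonalSSM:
    """Diagonal continuous-time state-space model: dx_i/dt = a_i*x_i + b_i*u, y = sum_i c_i*x_i.

    Discretized with zero-order hold, so each update costs O(state size) and memory
    stays constant however long the stream runs.
    """

    a: list[float]  # negative decay rates; -1/a_i is channel i's time constant
    b: list[float]
    c: list[float]
    dt: float
    x: list[float] = field(init=False)

    def __post_init__(self) -> None:
        self.x = [0.0] * len(self.a)
        # Exact discretization of each scalar channel for piecewise-constant input.
        self.a_bar = [math.exp(ai * self.dt) for ai in self.a]
        self.b_bar = [(math.exp(ai * self.dt) - 1.0) / ai * bi for ai, bi in zip(self.a, self.b)]

    def update(self, u: float) -> float:
        """Consume one incoming sample, advance the state by dt, and return the readout."""
        self.x = [ab * xi + bb * u for ab, xi, bb in zip(self.a_bar, self.x, self.b_bar)]
        return sum(ci * xi for ci, xi in zip(self.c, self.x))


if __name__ == "__main__":
    model = StreamingDiagonalSSM(a=[-1.0, -10.0], b=[1.0, 1.0], c=[1.0, 1.0], dt=0.1)
    for sample in (0.0, 1.0, 1.0, 0.5):  # e.g. sensor readings arriving one at a time
        print(model.update(sample))
```

Each call to `update` touches only the current state vector, so there is no growing buffer of past inputs. The fast channel (`a = -10`) reacts quickly, and the slow channel (`a = -1`) integrates over a longer horizon.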

Challenges and Considerations

While LFMs offer impressive advantages, they also introduce new complexities:

- Differential Equation Solvers

Training and inference involve solving continuous‑time equations, which may demand specialized numerical routines and careful stability analysis.

- Hyperparameter Sensitivity

Parameters governing time‑constants, gating thresholds, and solver tolerances require meticulous tuning, potentially increasing model development overhead.

- Ecosystem Maturity

Tooling and community support for transformer models remain far more extensive; LFMs require updated libraries and educational resources to reach the same level of accessibility.
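The solver-stability concern can be illustrated on the simplest time-constant dynamics, the decay equation dx/dt = -k·x with k = 1/τ. The sketch compares forward (explicit) and backward (implicit) Euler. It is a textbook illustration under that assumption, not the solver LFMs use: a fast time constant combined with a coarse step makes the explicit update diverge, which is one reason implicit or semi-implicit schemes are attractive for continuous-time models.

```python
def explicit_euler(k: float, x0: float, dt: float, steps: int) -> float:
    """Forward Euler for dx/dt = -k*x: x_next = (1 - k*dt) * x, stable only if k*dt < 2."""
    x = x0
    for _ in range(steps):
        x = x + dt * (-k * x)
    return x


def implicit_euler(k: float, x0: float, dt: float, steps: int) -> float:
    """Backward Euler for dx/dt = -k*x: x_next = x / (1 + k*dt), stable for any dt > 0."""
    x = x0
    for _ in range(steps):
        x = x / (1.0 + k * dt)
    return x


if __name__ == "__main__":
    k = 50.0  # fast dynamics: time constant tau = 1/k = 0.02
    for dt in (0.01, 0.1):
        print(f"dt={dt}: explicit {explicit_euler(k, 1.0, dt, 10):.3g}, "
              f"implicit {implicit_euler(k, 1.0, dt, 10):.3g}")
```

With dt = 0.1 the explicit update multiplies the state by 1 - 5 = -4 at every step and blows up, while the implicit update divides it by 6. With dt = 0.01 both decay toward zero.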

Despite these hurdles, Liquid AI has invested heavily in developer tooling—open‑source SDKs, pretrained checkpoints, and conversion scripts—that lower the barrier to entry, fostering a growing ecosystem around LFMs.

Future Directions

The advent of LFMs heralds a broader shift toward models that integrate continuous‑time dynamics with discrete attention, combining the best of both worlds. Possible future innovations include:

- Hybrid Architectures: Seamlessly interleaving LTC modules with self‑attention blocks to capture both local adaptability and global context interactions.

- Larger‑Scale LFMs: Pushing parameter counts into the hundreds of billions while maintaining efficiency gains, challenging the dominance of giant transformer models.

- Auto‑Differentiated Solvers: Incorporating learnable solvers that adapt their numerical strategies during training, further optimizing compute and accuracy.

- Open‑Source Community Growth: As more researchers contribute to LFM toolkits and benchmarks, standards will emerge, making continuous‑time foundation models a staple in the AI toolkit.

Final Words

In summary, Liquid Foundation Models represent a technological leap, offering a fluid, adaptive, and efficient alternative to transformer‑based systems. By redefining how neural networks process sequential and multi‑modal data, LFMs unlock new possibilities in AI deployment—from real‑time edge analytics to seamless cross‑modal content generation—while charting a promising path for the next generation of intelligent systems.