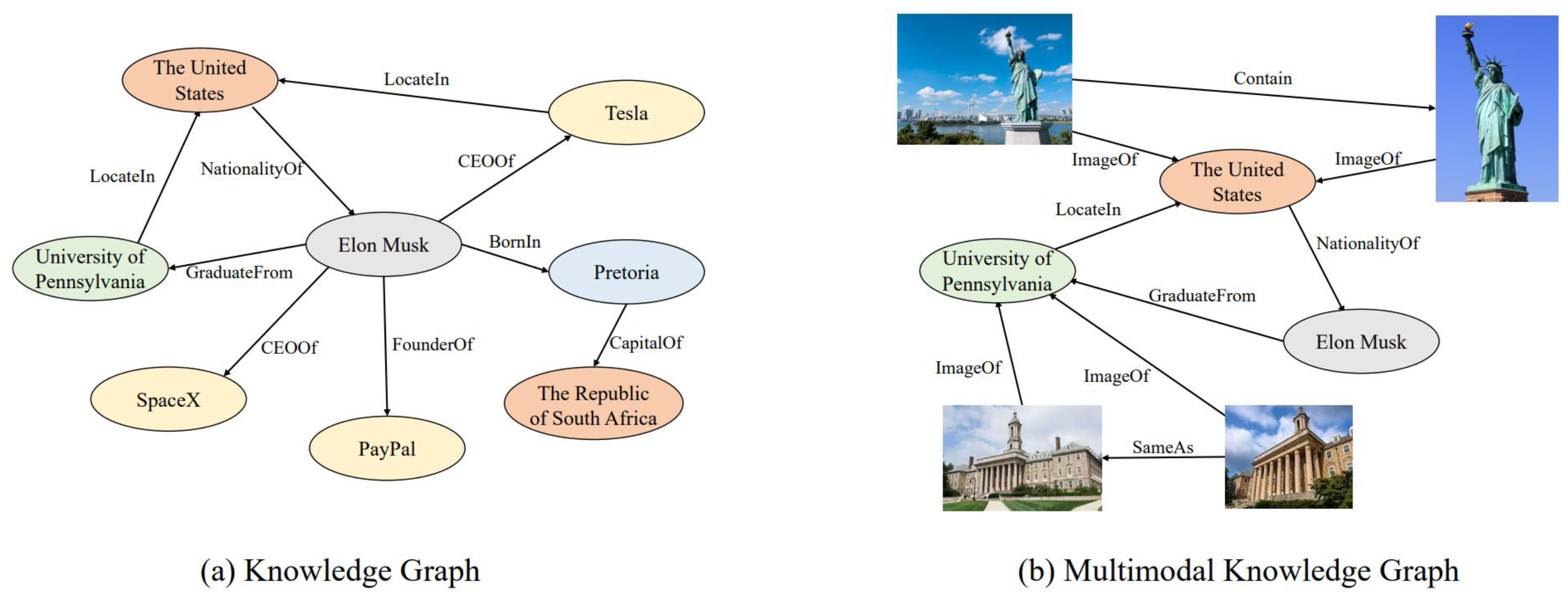

In today’s data-driven world, the ability to integrate diverse types of data is crucial for building intelligent systems. Traditional knowledge graphs excel at organizing and representing structured knowledge from textual sources, but they often fall short when handling multi-modal data such as images, sound, and video. This is where Multimodal Knowledge Graphs come into play. A Multimodal Knowledge Graph integrates structured knowledge from several modalities, enabling a more comprehensive and nuanced understanding of complex concepts and relationships. This article covers the definition, construction, applications, and challenges of Multimodal Knowledge Graphs.

Definition and Structure

A Multimodal Knowledge Graph is defined as a graph structure $G = \{E, R, A, T, V\}$, where:

- E represents entities or concepts.

- R denotes relations between entities.

- A stands for attributes or properties of entities.

- T is a tuple containing two components:

  - TA: The attribute values of entities, which can include multi-modal data such as images.

  - TR: The triples of entities, relations, and entities, defining the relationships between entities.

- V represents the modalities or data sources used to construct the Multimodal Knowledge Graph, including text, images, sound, and video.
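The five components above can be sketched as a small container type. This is a minimal illustration and not a reference implementation: the class name `MultimodalKG`, its field names, the helper methods, and the choice to store TA as (entity, attribute, value) tuples are all assumptions made for this example.

```python
from dataclasses import dataclass, field


@dataclass
class MultimodalKG:
    """Hypothetical container mirroring G = {E, R, A, T, V}."""
    entities: set = field(default_factory=set)             # E
    relations: set = field(default_factory=set)            # R
    attributes: set = field(default_factory=set)           # A
    attribute_triples: list = field(default_factory=list)  # TA, assumed (entity, attribute, value)
    relation_triples: list = field(default_factory=list)   # TR, (head, relation, tail)
    modalities: set = field(default_factory=set)           # V

    def add_relation(self, head, relation, tail):
        """Record a relation triple and register its parts in E and R."""
        self.entities.update({head, tail})
        self.relations.add(relation)
        self.relation_triples.append((head, relation, tail))

    def add_attribute(self, entity, attribute, value, modality="text"):
        """Record an attribute value and the modality it comes from."""
        self.entities.add(entity)
        self.attributes.add(attribute)
        self.modalities.add(modality)
        self.attribute_triples.append((entity, attribute, value))


# Illustrative usage with made-up identifiers.
kg = MultimodalKG()
kg.add_relation("Eiffel Tower", "locatedIn", "Paris")
kg.add_attribute("Eiffel Tower", "label", "Tour Eiffel", modality="text")
kg.add_attribute("Eiffel Tower", "hasImage", "eiffel_tower.jpg", modality="image")
```

Keeping TA and TR as separate lists makes it easy to see which facts link two entities and which attach a (possibly non-textual) value to a single entity.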

Construction of Multimodal Knowledge Graph

A Multimodal Knowledge Graph can be constructed in two primary ways:

- Attribute-based Multimodal Knowledge Graphs: In this approach, multi-modal data (e.g., images) is used as specific attribute values for entities or concepts. For example, images can be labeled with knowledge graph symbols, and symbol-image grounding can be used to establish relationships between entities and images. This method integrates visual data directly into the attributes of entities.

- Node-based Multimodal Knowledge Graphs: Inspired by the work of Zhu et al., this method treats images (and other modalities) as independent entities with direct relationships to other entities. This approach allows for more flexible and dynamic representation of multi-modal data, as it does not confine the data to attribute values but considers them as entities that can interact with other entities in the graph.
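The difference between the two approaches can be sketched by converting one representation into the other. The example below is an illustration under stated assumptions: the attribute name `hasImage`, the relation `sameSceneAs`, the file names, and the function `promote_images_to_nodes` are hypothetical and do not come from Zhu et al. or any particular library.

```python
# Hypothetical set of attribute names whose values are images.
IMAGE_ATTRIBUTES = {"hasImage"}


def promote_images_to_nodes(entities, relation_triples, attribute_triples):
    """Turn an attribute-based graph into a node-based one.

    Every image attribute value becomes an entity of its own, and the
    attribute triple becomes a relation triple pointing at that entity.
    Non-image attributes are left untouched.
    """
    new_entities = set(entities)
    new_relations = list(relation_triples)
    remaining_attributes = []
    for entity, attribute, value in attribute_triples:
        if attribute in IMAGE_ATTRIBUTES:
            new_entities.add(value)
            new_relations.append((entity, attribute, value))
        else:
            remaining_attributes.append((entity, attribute, value))
    return new_entities, new_relations, remaining_attributes


# Attribute-based form: the images are only values attached to an entity.
entities = {"Eiffel Tower", "Paris"}
relation_triples = [("Eiffel Tower", "locatedIn", "Paris")]
attribute_triples = [
    ("Eiffel Tower", "hasImage", "eiffel_day.jpg"),
    ("Eiffel Tower", "hasImage", "eiffel_night.jpg"),
    ("Eiffel Tower", "label", "Tour Eiffel"),
]

node_entities, node_relations, node_attributes = promote_images_to_nodes(
    entities, relation_triples, attribute_triples
)

# Node-based form: images are entities, so they can be related to each other.
node_relations.append(("eiffel_day.jpg", "sameSceneAs", "eiffel_night.jpg"))
```

The last line is the point of the node-based design: once an image is an entity, it can take part in relation triples of its own, which an attribute value cannot.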

Figure: An example of a knowledge graph.

Applications of Multimodal Knowledge Graphs

Multimodal Knowledge Graphs have numerous applications across various domains:

- Image Classification: By integrating visual and textual information, Multimodal Knowledge Graphs can improve image classification accuracy. Images can be labeled with knowledge graph symbols, and symbol-image grounding can establish relationships between entities and images, leading to more precise classification.

- Visual Question Answering (VQA): Multimodal Knowledge Graphs can integrate visual and textual information to answer complex questions that require both commonsense and encyclopedic knowledge. For example, when asked about the objects in an image and their relationships, Multimodal Knowledge Graphs can leverage both visual data and textual descriptions to provide accurate answers.

- Image Captioning: Combining visual and semantic information from images, Multimodal Knowledge Graphs can generate more accurate and descriptive captions. This involves understanding the entities and their relationships within an image and translating that understanding into coherent text.

- Scene Graph Generation: Multimodal Knowledge Graphs can derive semantic relationship features from entity triples for scene graph generation, which is essential for tasks like image captioning and visual question answering. Scene graphs represent objects in an image and their relationships, providing a structured representation that can be used for various downstream tasks.

- Multi-modal Named Entity Recognition (NER) and Relation Extraction: Multimodal Knowledge Graphs can recognize and extract entities and relationships from multi-modal data, such as text and images. This capability is particularly useful in domains where information is presented in diverse formats, such as news articles or social media posts.

- Multi-modal Event Extraction: Multimodal Knowledge Graphs can extract events and relationships from multi-modal data, identifying significant occurrences and their contexts. This is valuable for applications in surveillance, journalism, and event monitoring.

- Multi-modal Entity Alignment: Multimodal Knowledge Graphs can align entities across different modalities, such as text and images, to improve entity recognition and disambiguation. This alignment ensures that the same entity is consistently identified and linked across various data sources.

- Industrial Applications: In industrial settings, Multimodal Knowledge Graphs can enhance product recommendation systems by integrating multi-modal data, leading to more accurate and personalized recommendations. For example, a recommendation system can use product images, descriptions, and user reviews to suggest relevant products to customers.
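As one concrete illustration of the tasks listed above, multi-modal entity alignment can be sketched as a nearest-neighbour search over fused embeddings. This is a deliberately simple sketch: the concatenation-based `fuse` step, the function names, and the tiny hand-written vectors are assumptions for illustration only. Practical systems typically learn the embeddings and the fusion.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def fuse(text_vec, image_vec):
    """Fuse modalities by simple concatenation (an assumed, naive choice)."""
    return list(text_vec) + list(image_vec)


def align(source, target):
    """Map each source entity to the most similar target entity.

    `source` and `target` map entity names to (text_vec, image_vec) pairs.
    """
    matches = {}
    for name, (text_vec, image_vec) in source.items():
        query = fuse(text_vec, image_vec)
        matches[name] = max(
            target, key=lambda candidate: cosine(query, fuse(*target[candidate]))
        )
    return matches


# Toy embeddings, invented purely to make the example runnable.
kg_a = {
    "Eiffel Tower": ([1.0, 0.0], [0.9, 0.1]),
    "Louvre": ([0.0, 1.0], [0.2, 0.8]),
}
kg_b = {
    "Tour Eiffel": ([0.9, 0.1], [1.0, 0.0]),
    "Musée du Louvre": ([0.1, 0.9], [0.1, 0.9]),
}

alignment = align(kg_a, kg_b)
```

With these toy vectors, `alignment` pairs "Eiffel Tower" with "Tour Eiffel" and "Louvre" with "Musée du Louvre", because both the text and the image parts of the fused vectors agree.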

Challenges and Opportunities

While Multimodal Knowledge Graphs offer significant benefits, they also present several challenges:

- Construction and Acquisition: Building Multimodal Knowledge Graphs can be complex and time-consuming, particularly when dealing with large-scale datasets. Collecting, annotating, and integrating multi-modal data requires substantial effort and resources.

- KG4MM Tasks: Using knowledge graphs to support multi-modal learning tasks (KG4MM) is challenging because information from different modalities must be aligned and fused. Integrating text, images, sound, and video coherently is non-trivial.

- MM4KG Tasks: Extending knowledge graphs to handle multi-modal data (MM4KG) requires new algorithms and techniques for constructing and reasoning over Multimodal Knowledge Graphs, including methods for representing and manipulating multi-modal information effectively.

- Integration with Large Language Models (LLMs): Combining Multimodal Knowledge Graphs with large language models is challenging due to the need to align and fuse information from different modalities and handle the complexity of large-scale datasets. Large language models like GPT-4 can benefit from the structured information in Multimodal Knowledge Graphs, but integrating the two requires sophisticated techniques to manage the interplay between textual and non-textual data.
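One simple way to expose graph content to a text-only language model is to verbalize the triples into a prompt, substituting a caption for each non-textual node. The sketch below assumes that approach. The function names, the prompt layout, and the idea of passing captions in as a plain dictionary are illustrative assumptions, and no real model API is called.

```python
def verbalize(triples, captions=None):
    """Render (head, relation, tail) triples as text lines.

    `captions` optionally maps non-textual nodes (e.g. image file names)
    to short textual descriptions that stand in for them in the prompt.
    """
    captions = captions or {}
    lines = []
    for head, relation, tail in triples:
        head_text = captions.get(head, head)
        tail_text = captions.get(tail, tail)
        lines.append(f"({head_text}, {relation}, {tail_text})")
    return "\n".join(lines)


def build_prompt(question, triples, captions=None):
    """Assemble a prompt that places graph facts before the question."""
    facts = verbalize(triples, captions)
    return f"Known facts:\n{facts}\n\nQuestion: {question}\nAnswer:"


# Illustrative usage with made-up triples and a made-up caption.
triples = [
    ("Eiffel Tower", "locatedIn", "Paris"),
    ("Eiffel Tower", "hasImage", "eiffel_night.jpg"),
]
captions = {"eiffel_night.jpg": "a photo of an illuminated iron tower at night"}

prompt = build_prompt("Where is the Eiffel Tower?", triples, captions)
```

Replacing images with captions loses visual detail, which is part of why this integration is listed as a challenge. Approaches that feed image features to the model directly avoid that loss at the cost of a more complex pipeline.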

Conclusion

Multimodal Knowledge Graphs are a powerful tool for integrating structured knowledge from various modalities, enabling a more comprehensive and nuanced understanding of complex concepts and relationships. They have applications across diverse domains, from image classification and visual question answering to product recommendation, where they can improve accuracy and personalization. However, constructing and acquiring Multimodal Knowledge Graphs, aligning and fusing information across modalities, and integrating with large language models all present substantial challenges. Addressing these challenges requires ongoing research and development, but the potential rewards make Multimodal Knowledge Graphs a promising area of study in artificial intelligence and knowledge representation.